While current watermarking technologies offer viable methods for content attribution and model protection, they still face significant technical challenges. The hardest is balancing robustness against attacks with the competing demands of detectability, content quality, and capacity.

What Are Robustness, Fidelity & Capacity?

Robustness

“Robustness” is a watermark’s ability to survive both routine modifications (such as editing, compression, and format conversion) and deliberate removal attacks. Key characteristics of robustness include persistence (the watermark remains detectable even after multiple transformations), degradation tolerance (even if partially damaged, enough of the watermark survives to be identified), and statistical resilience (the watermark’s statistical properties remain distinguishable from noise).
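To make persistence and statistical resilience concrete, here is a minimal sketch of a textbook additive spread-spectrum watermark. This is a well-known technique from the image and audio watermarking literature, used here purely for illustration and not as the method of any system discussed in this article. The host is a synthetic 1-D signal. The "attack" is simulated Gaussian noise followed by coarse rounding, a crude stand-in for lossy compression. All function names, keys, and parameters (`embed`, `attack`, `detect_z`, `alpha`, `noise_std`, `step`) are hypothetical.

```python
import math
import random

def watermark_pattern(key: int, n: int) -> list[float]:
    """Pseudo-random +/-1 pattern derived from a secret key (hypothetical keying scheme)."""
    rng = random.Random(key)
    return [rng.choice((-1.0, 1.0)) for _ in range(n)]

def embed(host: list[float], key: int, alpha: float) -> list[float]:
    """Additive spread-spectrum embedding: y_i = x_i + alpha * w_i."""
    w = watermark_pattern(key, len(host))
    return [x + alpha * wi for x, wi in zip(host, w)]

def attack(signal: list[float], noise_std: float, step: float, seed: int = 0) -> list[float]:
    """Simulated damage: additive Gaussian noise, then rounding to a coarse grid
    (a crude stand-in for lossy compression)."""
    rng = random.Random(seed)
    return [round((s + rng.gauss(0.0, noise_std)) / step) * step for s in signal]

def detect_z(signal: list[float], key: int) -> float:
    """Blind correlation detector. If the signal does not carry this key's pattern,
    sum(s_i * w_i) has mean 0 and variance sum(s_i^2), so the ratio is roughly N(0, 1)."""
    w = watermark_pattern(key, len(signal))
    corr = sum(s * wi for s, wi in zip(signal, w))
    return corr / math.sqrt(sum(s * s for s in signal))

# Synthetic host: 10,000 samples with standard deviation 10 (illustrative values only).
rng = random.Random(1)
host = [rng.gauss(0.0, 10.0) for _ in range(10_000)]

marked = embed(host, key=42, alpha=1.0)
damaged = attack(marked, noise_std=5.0, step=4.0)

print(f"unmarked host, right key: z = {detect_z(host, key=42):.1f}")
print(f"damaged copy,  right key: z = {detect_z(damaged, key=42):.1f}")
print(f"damaged copy,  wrong key: z = {detect_z(damaged, key=7):.1f}")
```

With these settings, the right key typically still yields a z-score several units above zero after the simulated damage. The unmarked host and the wrong key stay near 0. That gap is the statistical resilience described above. Raising `noise_std` or shrinking `alpha` narrows it.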

Fidelity

“Fidelity” is how imperceptible the watermark is to humans and how little it degrades the quality of the content. Key characteristics of fidelity include imperceptibility (the watermark introduces no perceptible changes to the content quality), preservation of functionality (the watermarked content performs identically to the original in its intended use cases), and minimal distortion (quantifiable metrics like signal-to-noise ratio or perceptual distance remain within acceptable thresholds).
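The "minimal distortion" criterion is usually checked numerically. The sketch below computes two common metrics for a toy 8-bit "image" flattened to a list of pixel values: signal-to-noise ratio (SNR) and peak signal-to-noise ratio (PSNR). The ±2 perturbation, the helper names, and the 40 dB acceptance threshold are illustrative assumptions. They are not values from any standard or from the sources cited here.

```python
import math
import random

def snr_db(original: list[float], marked: list[float]) -> float:
    """SNR: power of the original signal relative to the power of the embedded change."""
    signal_power = sum(x * x for x in original)
    noise_power = sum((y - x) ** 2 for x, y in zip(original, marked))
    return 10.0 * math.log10(signal_power / noise_power)

def psnr_db(original: list[float], marked: list[float], peak: float = 255.0) -> float:
    """PSNR: squared peak value relative to the mean squared error (MSE)."""
    mse = sum((y - x) ** 2 for x, y in zip(original, marked)) / len(original)
    return 10.0 * math.log10(peak ** 2 / mse)

# Toy 8-bit "image" (pixel values kept away from 0 and 255 so clipping never triggers).
rng = random.Random(0)
pixels = [rng.randint(10, 245) for _ in range(1_000)]

# Hypothetical watermark: nudge every pixel by +/-2, clipped to the valid 0..255 range.
pattern = [rng.choice((-2, 2)) for _ in pixels]
marked = [min(255, max(0, p + d)) for p, d in zip(pixels, pattern)]

psnr = psnr_db(pixels, marked)
ACCEPTABLE_PSNR_DB = 40.0  # illustrative threshold, not a standard
print(f"SNR  = {snr_db(pixels, marked):.2f} dB")
print(f"PSNR = {psnr:.2f} dB -> {'acceptable' if psnr >= ACCEPTABLE_PSNR_DB else 'too visible'}")
```

Every pixel moves by exactly 2, so the MSE is 4 and the PSNR works out to 10·log10(255²/4) ≈ 42.1 dB. Perceptual metrics such as SSIM track human judgments more closely than PSNR, but they need more machinery than fits in a short sketch.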

Capacity

“Capacity” is the amount of information that can be embedded in the watermark. Key characteristics of capacity include information payload (the number of bits the watermark can carry), scalability with content size (whether capacity grows or stays fixed as the host content gets larger), and redundancy requirements (the trade-off between embedding more distinct information and repeating the watermark for more reliable detection).
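The redundancy trade-off can be sketched with the simplest error-correcting code: repetition with majority-vote decoding. The sketch assumes, hypothetically, a fixed budget of 1,023 embedding slots and an attack that flips each embedded bit independently with probability 0.2. It shows how the decoded bit error rate falls as the payload shrinks and each bit gets more copies. The names `SLOTS`, `FLIP_PROB`, `encode`, `corrupt`, and `decode` are illustrative. Practical schemes often use stronger codes, but the direction of the trade-off is the same.

```python
import random

SLOTS = 1023      # hypothetical fixed embedding budget (number of slots in the host)
FLIP_PROB = 0.2   # hypothetical attack strength: chance that any one slot is corrupted

def encode(payload: list[int], slots: int) -> list[int]:
    """Repeat each payload bit r = slots // len(payload) times."""
    r = slots // len(payload)
    return [bit for bit in payload for _ in range(r)]

def corrupt(symbols: list[int], flip_prob: float, rng: random.Random) -> list[int]:
    """Binary symmetric channel: flip each embedded bit independently with probability flip_prob."""
    return [s ^ 1 if rng.random() < flip_prob else s for s in symbols]

def decode(symbols: list[int], n_bits: int) -> list[int]:
    """Majority vote over each bit's r copies (r is odd for the sizes used below, so no ties)."""
    r = len(symbols) // n_bits
    return [1 if 2 * sum(symbols[i * r:(i + 1) * r]) > r else 0 for i in range(n_bits)]

def bit_error_rate(n_bits: int, trials: int = 200, seed: int = 0) -> float:
    """Average fraction of payload bits decoded incorrectly over many simulated attacks."""
    rng = random.Random(seed)
    errors = 0
    for _ in range(trials):
        payload = [rng.randint(0, 1) for _ in range(n_bits)]
        received = decode(corrupt(encode(payload, SLOTS), FLIP_PROB, rng), n_bits)
        errors += sum(a != b for a, b in zip(payload, received))
    return errors / (n_bits * trials)

ber = {}
for n_bits in (11, 93, 341, 1023):  # payload sizes; copies per bit = 93, 11, 3, 1
    ber[n_bits] = bit_error_rate(n_bits)
    print(f"payload {n_bits:4d} bits, {SLOTS // n_bits:3d} copies each -> bit error rate {ber[n_bits]:.4f}")
```

With a single copy, the error rate simply equals the flip probability (about 0.2). With three copies, the theoretical rate is 3p²(1 - p) + p³ ≈ 0.104 at p = 0.2. With 93 copies, errors essentially vanish, but the payload is only 11 bits.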

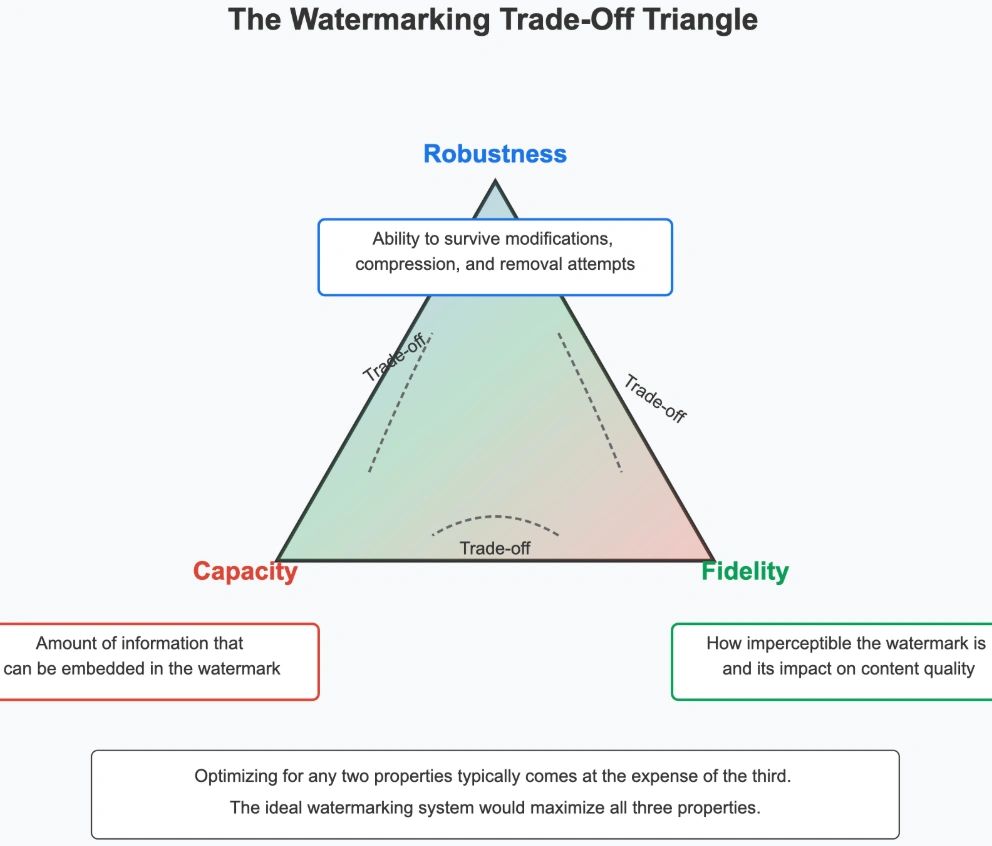

The Watermarking Trade-Off Triangle

The ongoing challenge for watermarking systems is illustrated by the “Watermarking Trade-Off Triangle.” Each corner represents a key property (robustness, fidelity, or capacity), and optimizing for any two properties typically comes at the expense of the third:

Increasing robustness while maintaining high capacity often reduces fidelity (watermarks become more noticeable); enhancing fidelity while preserving capacity typically reduces robustness (watermarks become easier to remove); and maximizing robustness and fidelity usually limits capacity (less information can be embedded).
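A back-of-the-envelope model shows why the three corners pull against each other. Assume a simplified additive spread-spectrum scheme with these properties:

- Each of k payload bits is spread over n/k host samples with embedding strength α.
- The host and any attack noise together act like Gaussian interference with standard deviation σ.
- Detection is by correlation.

Under these assumptions, the expected per-bit detection score is roughly z ≈ α·√(n/k)/σ. Fidelity, measured as the host-to-watermark power ratio, is 20·log10(σ_host/α) dB. Fixing any two of robustness (z), fidelity (α), and capacity (k) pins down the third. All names and numbers below are hypothetical.

```python
import math

def per_bit_z(alpha: float, n: int, k: int, host_std: float, noise_std: float) -> float:
    """Expected correlation score for one bit spread over n / k samples.
    Host and attack noise are treated as independent Gaussian interference."""
    sigma = math.sqrt(host_std ** 2 + noise_std ** 2)
    return alpha * math.sqrt(n / k) / sigma

def fidelity_db(alpha: float, host_std: float) -> float:
    """Host-to-watermark power ratio in dB (higher means less perceptible)."""
    return 20.0 * math.log10(host_std / alpha)

def max_bits(alpha: float, n: int, z_target: float, host_std: float, noise_std: float) -> int:
    """Largest payload k with alpha * sqrt(n / k) / sigma >= z_target."""
    sigma2 = host_std ** 2 + noise_std ** 2
    return math.floor(n * alpha ** 2 / (z_target ** 2 * sigma2))

N, HOST_STD, NOISE_STD, Z_TARGET = 10_000, 10.0, 5.0, 4.0  # illustrative values only
for alpha in (0.5, 1.0, 2.0, 4.0):
    print(f"alpha={alpha:3.1f}  fidelity={fidelity_db(alpha, HOST_STD):5.1f} dB  "
          f"max payload at z>={Z_TARGET:.0f}: {max_bits(alpha, N, Z_TARGET, HOST_STD, NOISE_STD)} bits")
```

In this model, each doubling of α costs about 6 dB of fidelity but quadruples the payload that still clears the detection threshold. Demanding a higher z_target (more robustness) at fixed α shrinks the payload in proportion to 1/z².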

The Watermarking Trade-Offs

There is an inherent trade-off in watermarking systems: increasing robustness often reduces fidelity and capacity. Put simply, stronger watermarks that resist removal are more likely to degrade content quality (RAND, 2024). According to Cox et al. (2002) and Schramowski et al. (2023), stronger watermarks may also introduce detectable artifacts, while higher capacity can make watermarks easier to spot and remove. Kirchenbauer et al. (2023), meanwhile, acknowledge the trade-off between watermark detectability and content quality, noting that there is a “tradeoff between watermark strength (z-score) and text quality (perplexity) for various combinations of watermarking parameters.”
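The z-score in that quote comes from a simple statistical test. The green-list scheme of Kirchenbauer et al. (2023) works in three steps:

1. The previous token seeds a pseudo-random split of the vocabulary into a "green" fraction γ and a "red" remainder.
2. Generation adds a bias δ to the green-token logits.
3. A detector counts green tokens. With T tokens, of which |s|_G are green, it computes z = (|s|_G - γT) / √(Tγ(1 - γ)).

The sketch below is a toy illustration, not the authors' implementation. It uses random logits in place of a language model, a hypothetical key and seeding rule (`KEY`, `green_list`), and a 100-token vocabulary. It also substitutes the mean KL divergence between the original and biased next-token distributions for perplexity as a rough quality-loss proxy.

```python
import math
import random

VOCAB, GAMMA, T = 100, 0.25, 200   # toy vocabulary size, green fraction, tokens generated
KEY = 12345                        # hypothetical secret key

def green_list(prev_token: int) -> set[int]:
    """Green list for the next position, seeded by the secret key and the previous token."""
    rng = random.Random(KEY * VOCAB + prev_token)
    return set(rng.sample(range(VOCAB), int(GAMMA * VOCAB)))

def softmax(logits: list[float]) -> list[float]:
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def generate(delta: float, seed: int = 0) -> tuple[list[int], float]:
    """Sample T tokens from a toy 'model' (random logits per step), adding delta to green logits.
    Returns the tokens and the mean per-step KL(original || watermarked)."""
    rng = random.Random(seed)
    tokens, prev, kl_total = [], 0, 0.0
    for _ in range(T):
        logits = [rng.gauss(0.0, 2.0) for _ in range(VOCAB)]  # stand-in for a language model
        green = green_list(prev)
        p = softmax(logits)
        q = softmax([v + delta if i in green else v for i, v in enumerate(logits)])
        kl_total += sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))
        prev = rng.choices(range(VOCAB), weights=q)[0]
        tokens.append(prev)
    return tokens, kl_total / T

def detect_z(tokens: list[int], first_prev: int = 0) -> float:
    """Recompute each green list from the preceding token (no model needed) and apply the z-test."""
    n = len(tokens)
    prevs = [first_prev] + tokens[:-1]
    green_count = sum(tok in green_list(prev) for prev, tok in zip(prevs, tokens))
    return (green_count - GAMMA * n) / math.sqrt(n * GAMMA * (1 - GAMMA))

results = {}
for delta in (0.0, 1.0, 2.0, 4.0):
    tokens, kl = generate(delta)
    results[delta] = (detect_z(tokens), kl)
    print(f"delta={delta:3.1f}  z={results[delta][0]:6.2f}  mean KL={kl:.3f} nats")
```

Larger δ makes the watermark easier to detect (higher z) but pushes sampling further from the model's own distribution (higher KL). That is the strength-versus-quality tension the quote describes. The paper itself measures quality with perplexity, as the quote notes.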

According to researchers, the relationship between watermark detectability and content quality involves several distinct trade-offs:

Signal Strength vs. Perceptibility

More detectable watermarks require stronger signals embedded in content, which typically means more noticeable alterations that may affect quality or user experience (RAND, 2024).

Robustness vs. Quality

Watermarks resistant to removal or modification generally require more substantial alterations to the content, potentially compromising quality (DataCamp, 2025).

Detection Reliability vs. False Positives

Increasing detection sensitivity to catch more watermarked content typically raises the false positive rate, incorrectly flagging human-created content as AI-generated (TechTarget, 2024).
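For z-score detectors like the green-list test, this trade-off can be quantified directly under a simplifying assumption. Suppose the score on unwatermarked text is approximately standard normal, and the score on watermarked text is approximately normal with unit variance and some mean μ. Lowering the decision threshold τ then raises both the true positive rate, P(N(μ, 1) > τ), and the false positive rate, P(N(0, 1) > τ). The value μ = 4 and the helper names below are hypothetical. Think of μ as an average score for weakly watermarked text, such as short or heavily edited passages.

```python
import math

def upper_tail(x: float) -> float:
    """P(Z > x) for a standard normal Z."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))

def rates(threshold: float, watermarked_mean: float) -> tuple[float, float]:
    """(true positive rate, false positive rate) for the rule 'flag if z > threshold'."""
    tpr = upper_tail(threshold - watermarked_mean)
    fpr = upper_tail(threshold)
    return tpr, fpr

MU = 4.0  # hypothetical mean z-score of watermarked text after editing
table = {tau: rates(tau, MU) for tau in (2.0, 3.0, 4.0, 5.0)}
for tau, (tpr, fpr) in table.items():
    print(f"threshold z>{tau:.0f}: detects {tpr:6.1%} of watermarked text, "
          f"flags {fpr:.1e} of human text ({fpr * 1e6:,.0f} per million)")
```

The normal-null assumption can fail in practice, for example on highly repetitive text, so empirical false positive rates may be higher than these tail probabilities suggest.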

Processing Overhead

More sophisticated watermarking and detection systems may introduce computational overhead, potentially affecting performance in real-time applications (Brookings, 2024).

User Experience Impact

Visible watermarks clearly identify AI-generated content, but they can detract from content aesthetics and usability (Hugging Face, 2024).

Content Flexibility Limitations

Robust watermarking may limit how content can be edited, shared, or repurposed, potentially restricting legitimate uses (Access Now, 2023).

Application-Specific Requirements

As noted by Chakraborty et al. (2022), certain applications (like medical diagnostics or malware detection) have little tolerance for prediction errors. These applications require watermarking approaches that prioritize fidelity over detectability.

Final Thoughts

These trade-offs highlight why watermarking alone isn’t a complete solution for AI content identification. Its future effectiveness will likely depend on multi-layered approaches that combine various watermarking techniques with complementary authentication mechanisms, such as provenance tracking and blockchain verification.

Thanks for reading!